Look beyond “base-level” security to build a valuable container security company

Preeti Rathi - March 3, 2016

Much has been written about how containers are porous, that is, not as secure as virtual machines. In fact, a while ago I wrote a blog post about how security is the weakest area of containers. What a difference a few months have made: Docker, CoreOS and quite a few other groups have been heads-down on tightening container security and have announced numerous initiatives to make containers secure.

Let’s begin with Docker. A few weeks ago, the company announced the acquisition of Unikernel Systems. The core idea with unikernels is to strip down the operating system to its bare minimum based on the requirements of an application so that it can run that very specific application. This elimination of unnecessary code results in multiple benefits –

- Reduced attack surface for the application

- Smaller memory footprint

- Faster boot time

(source: unikernels.org)

With Docker's acquisition of Unikernel Systems, unikernels are likely to gain wider adoption, effectively becoming just another type of container. Any application for which security and efficiency are crucial thus has a solution in unikernels.

Docker also recently announced support for seccomp (secure computing mode) and SELinux (Security-Enhanced Linux). Seccomp controls an application's behavior by limiting which system calls the application is permitted to make. Without seccomp, an application can make any system call supported by the kernel, and a malicious user can try any parameters they want until they find an exploit. The challenge that remains is for system administrators to figure out, in advance, which system calls each application needs.

SELinux, on the other hand, is a Linux kernel mechanism for enforcing access control security policies, including mandatory access control.
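To make the seccomp allowlist idea concrete, here is a minimal sketch in Python that builds a deny-by-default profile in the JSON layout that recent Docker releases accept through `--security-opt seccomp=<file>`. The syscall list and the `build_profile` helper are illustrative assumptions, not a vetted policy, and older Docker releases used a different per-syscall layout, so check the format your version expects.

```python
import json

# Hypothetical allowlist for illustration only; a real workload needs a
# list derived from auditing what the application actually calls.
ALLOWED_SYSCALLS = ["read", "write", "exit", "exit_group", "futex", "brk"]


def build_profile(allowed):
    """Return a deny-by-default seccomp profile as a plain dict."""
    return {
        # Any syscall not listed below fails with an error instead of running.
        "defaultAction": "SCMP_ACT_ERRNO",
        "syscalls": [
            # De-duplicate and sort so the generated file is stable.
            {"names": sorted(set(allowed)), "action": "SCMP_ACT_ALLOW"},
        ],
    }


profile = build_profile(ALLOWED_SYSCALLS)
profile_json = json.dumps(profile, indent=2)
print(profile_json)
```

Writing `profile_json` to a file and passing that file to `docker run` would apply the policy. The hard part, as noted above, is knowing which calls belong on the list.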

A few months ago, Docker also announced Docker Content Trust (DCT). This initiative is focused on a different aspect of container security: validating the identity of a container image's publisher and the integrity of its content. DCT works by using a two-key mechanism, in which the publisher signs her work before making it publicly available and users are able to verify this signature.

CoreOS, which has always strongly emphasized security, has taken a different approach with its announcement of DTC (Distributed Trusted Computing). Using DTC, CoreOS says you can:

- Validate and trust individual node and cluster integrity, even in potentially compromised or even hostile data center conditions

- Verify system state before distributing app containers, data or secrets

- Prevent attacks that involve modifying firmware, bootloader, the OS itself, or the deployment pipeline

- Cryptographically verify, with an audit log, what containers have executed on the system

The boot process, from the very first step of powering on the system all the way to user login, is subjected to cryptographic validation: each step can execute only if it validates against a public key held in firmware.
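The chain-of-trust idea can be sketched in a few lines of Python. This is a simplified illustration, not CoreOS's implementation: it assumes each boot stage is a blob of bytes and compares SHA-256 digests against a trusted list, which stands in for the signature check against a firmware-held public key. The stage names and the `verify_boot_chain` helper are hypothetical.

```python
import hashlib


def digest(blob: bytes) -> str:
    """Hex-encoded SHA-256 digest of a stage's contents."""
    return hashlib.sha256(blob).hexdigest()


def verify_boot_chain(stages, trusted_digests):
    """Walk the stages in boot order and stop at the first that fails.

    stages: list of (name, blob) pairs in boot order.
    trusted_digests: dict mapping stage name to its expected digest.
    Returns (ok, name_of_failed_stage_or_None).
    """
    for name, blob in stages:
        # An unknown stage has no trusted digest, so it fails as well.
        if trusted_digests.get(name) != digest(blob):
            return False, name
    return True, None


# Hypothetical stage contents, for illustration only.
good_chain = [
    ("bootloader", b"bootloader-v1"),
    ("kernel", b"kernel-v1"),
    ("os", b"os-image-v1"),
]
trusted = {name: digest(blob) for name, blob in good_chain}

# Simulate an attacker replacing the kernel image.
tampered_chain = list(good_chain)
tampered_chain[1] = ("kernel", b"kernel-with-rootkit")

print(verify_boot_chain(good_chain, trusted))      # (True, None)
print(verify_boot_chain(tampered_chain, trusted))  # (False, 'kernel')
```

The key design point is ordering: a stage is checked before control passes to it, so a modified kernel is caught before anything that depends on it runs.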

Then there are companies like Intel that are focused on improving the security of containers via hardware support. The company announced a solution called “Clear Containers” that provides hardware hooks and software shortcuts to speed up containers while providing virtual machine security. Instead of staying “general purpose” and being able to emulate any OS, they chose the lightweight kvmtool for the layer that QEMU usually fills, to offer a smaller footprint and faster boot times.

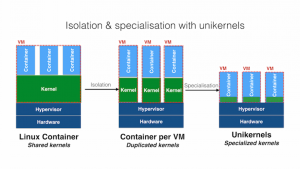

Finally, quite a few initiatives have been announced in recent times that focus on deliberately blurring the line between a lightweight, fast container engine and a VM that offers strong isolation, to offer the best of both.

Examples of these would be HyperD, Canonical’s LXD, the experimental Novm project, and Joyent’s Triton Elastic Container Host. The line will likely remain blurred, as companies experiment with combining the best of both worlds to provide products/projects that are focused on solving a range of requirements.

To give an example, here’s HyperD’s view of things –

(source: hyperd.org)

Containers are currently in a state of evolution, and which of these initiatives and products will gain momentum remains to be seen. What seems clear is that container security will be on par with that of VMs at some point in the future. Base-level container security is therefore table stakes, and startups focused on container security should identify areas beyond it to build a valuable company.